Projects

Below are some cool projects I have worked on in the past.

I used to keep a full list of my publications, but Google Scholar maintains one far better than I ever did, so check there for a publication list.

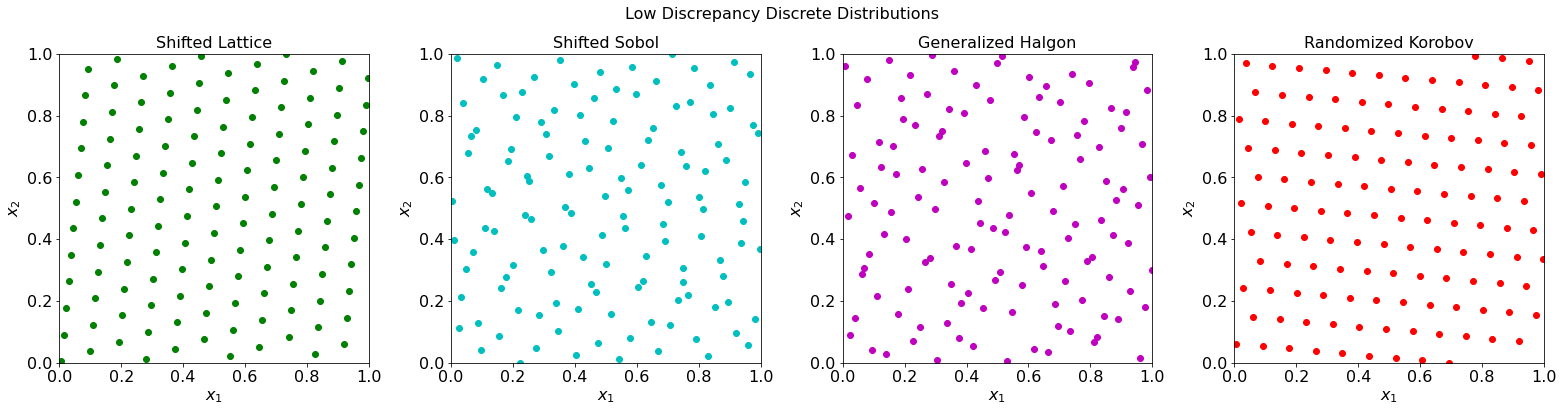

QMCPy

This is a long-time collaboration led by Fred Hickernell, Sou-Cheng Choi, Aleksei Sorokin, and others investigating strategies to implement quasi-Monte Carlo methods in Python. I mostly help by cheering them on and supplying funds. MCM meeting in Chicago in July 2025!

Visit QMCPy

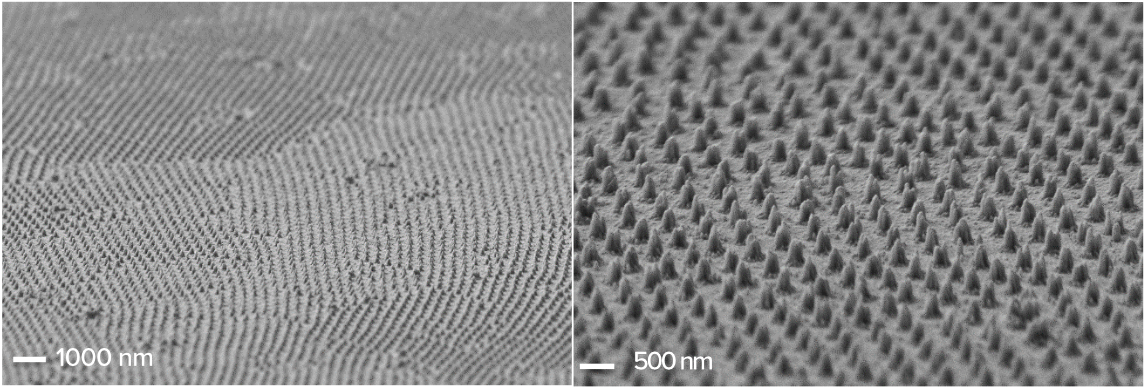

Glass Fabrication

This project used active learning and Bayesian optimization for materials design in additive manufacturing, aiming for optimal performance. Paul Leu led this project, which resulted in several great articles and fun times.

Visit LAMP group

GaussQR

A MATLAB library supporting our textbook on kernel methods, with new strategies for stable computation. One day I will work to adapt this to Python, but that has not yet happened.

GitHub

Team management

Kyle Emich and Li Lu invited me to collaborate on a project to better analyze how teams work using math. This was a great opportunity to learn how team psychology works and how success is measured.

Visit Kyle Emich

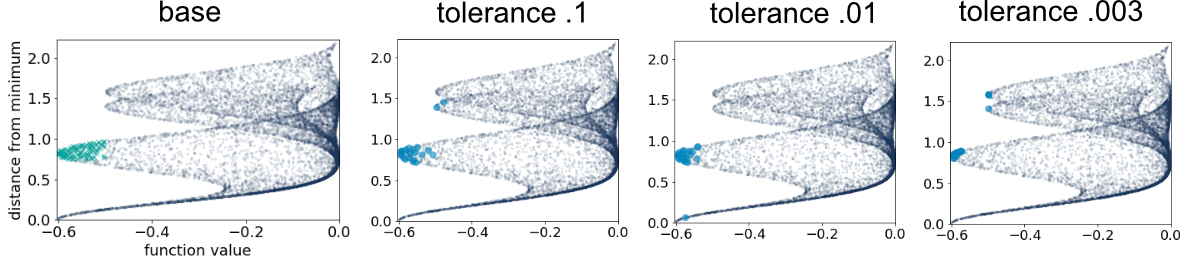

Prefopt

A sample-efficient search for the most preferred designs without an explicit objective. This code likely no longer runs, but the idea is what matters.

GitHub